Governance in Practice: PGF + Djobu at Work

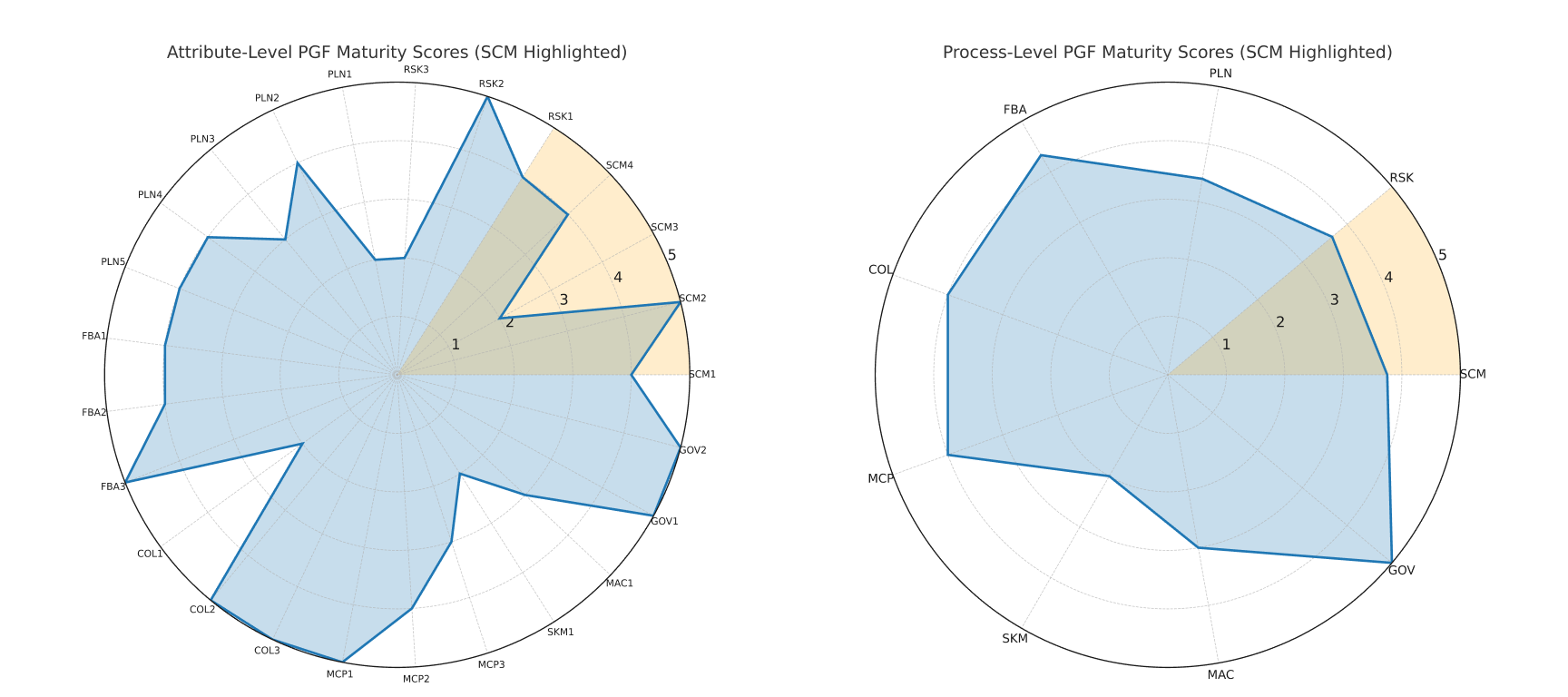

PGF scores show where governance processes are strong and where they fall short—so improvement efforts can focus where they deliver the greatest impact.

What you’re looking at

A snapshot of how PGF scores the “Requirements Are Defined” attribute. Each row is a criterion, and its score reflects how well the project data met the underlying test.

Why it matters

Scores highlight where process attributes are not meeting the goal. This makes it easy to see which improvements will deliver the greatest impact right now, helping teams prioritize effort where it counts most.

Next: How the Data Flows

Let’s zoom in on SCM1.C2. Here’s how Djobu captures requirements once, transforms them into the PGF requirementsRegister, and enables the test behind this score to run automatically.

SCM1 — Requirements Are Defined

| CRITERIA | DESCRIPTION | SCORE |
|---|---|---|
| SCM1.C1 | A requirements list exists for each project in the governance system. | 5 / 5 |
| SCM1.C2 | Each requirement is uniquely identified and traceable to its source. | 4 / 5 |
| SCM1.C3 | Requirements are classified by type and prioritized for management control. | 4 / 5 |

How Criteria Tests Generate Attribute Scores

What you’re seeing

A portion of the PGF-T02 · requirementsRegister table. This register is the input PGF uses to score SCM1.C2 — “Each requirement is uniquely identified and traceable to its source.”

How the score is derived

The test checks what percentage of requirements have both:

- A valid, unique requirementID
- A populated sourceID

Coverage = valid ÷ total. Example: 82 of 102 requirements passed → 80.4% coverage → score = 4/5.
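The coverage arithmetic can be sketched in Python as follows. The score bands are a hypothetical assumption, chosen only so that 80.4% lands on 4/5 as in the example; PGF's actual thresholds for SCM1.C2 are not stated here.

```python
# Hypothetical score bands: (minimum coverage %, score out of 5).
# These thresholds are an assumption for illustration, not PGF's published rule.
SCORE_BANDS = [(95.0, 5), (80.0, 4), (60.0, 3), (40.0, 2), (0.0, 1)]


def coverage_percent(valid: int, total: int) -> float:
    """Coverage = valid / total, as a percentage."""
    if total <= 0:
        raise ValueError("total must be positive")
    return 100.0 * valid / total


def score_from_coverage(coverage: float) -> int:
    """Return the score of the first band whose minimum the coverage reaches."""
    for minimum, score in SCORE_BANDS:
        if coverage >= minimum:
            return score
    return SCORE_BANDS[-1][1]  # fallback for negative inputs


cov = coverage_percent(82, 102)
print(f"{cov:.1f}% coverage -> score {score_from_coverage(cov)}/5")
# prints "80.4% coverage -> score 4/5"
```

The sketch scores the unrounded coverage and rounds only for display, so a borderline value such as 79.96% is not pushed into a higher band by rounding.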

Beyond a single test

Not all criteria are this simple. Some require multiple tests, each checking different aspects of the data (e.g., presence, linkage, timeliness). PGF then applies scoring rules — such as weighted averages, gating rules, or composite thresholds — to translate those test results into a single maturity score.
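As a rough illustration of how several test results might be combined, here is a Python sketch that applies a weighted average and a gating rule. The weights, gate threshold, cap, and score bands are all hypothetical; the section names these rule types but not their parameters.

```python
from dataclasses import dataclass

# Hypothetical parameters; PGF's actual weights, gates, and bands are not given here.
SCORE_BANDS = [(95.0, 5), (80.0, 4), (60.0, 3), (40.0, 2), (0.0, 1)]
GATE_CAP = 2  # a failed gating test caps the criterion at 2/5


@dataclass
class CheckResult:
    name: str          # aspect checked, e.g. "presence", "linkage", "timeliness"
    coverage: float    # percent of records that passed (0-100)
    weight: float      # relative weight in the weighted average
    gate: float = 0.0  # minimum coverage this check must reach (0 = no gate)


def band_score(coverage: float) -> int:
    """Map a coverage percentage to a 1-5 score using SCORE_BANDS."""
    for minimum, score in SCORE_BANDS:
        if coverage >= minimum:
            return score
    return SCORE_BANDS[-1][1]


def criterion_score(results: list[CheckResult]) -> int:
    """Weighted-average coverage mapped to a band, then capped if any gate fails."""
    total_weight = sum(r.weight for r in results)
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    weighted = sum(r.coverage * r.weight for r in results) / total_weight
    score = band_score(weighted)
    if any(r.coverage < r.gate for r in results):
        score = min(score, GATE_CAP)
    return score


checks = [
    CheckResult("presence", coverage=98.0, weight=0.5),
    CheckResult("linkage", coverage=85.0, weight=0.3, gate=50.0),
    CheckResult("timeliness", coverage=70.0, weight=0.2),
]
print(criterion_score(checks))  # prints 4 (weighted coverage 88.5%)
```

A gate lets one critical check override an otherwise good average: with linkage at 40% against a 50% gate, the same weights would yield 75% (a 3) but the cap reduces the criterion to 2.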

| Requirement ID | Source | Requirement | Priority | Type |
|---|---|---|---|---|
| ✅REQ-001 | ✅MIL-STD-881 Work Br... | System shall capture all customer requirements in a governed register... | High | Contractual |
| ✅REQ-002 | ✅SOW Section C 2.3.1.4... | System shall provide automated unique ID assignment for each requirement... | Medium | Stakeholder |
| ✅REQ-003 | ✅BRD v2 §7 Traceability... | System shall allow traceability from requirements to Work Packages... | Low | Internal |
| ✅REQ-004 | ✅Contract Mod 03 §4.2... | System shall generate status outputs filtered by requirement classification... | High | Contractual |
| ✅REQ-005 | ✅QMS-PROC-12 Owner Resp... | Each entry shall include an accountable owner for governance follow-up... | Medium | Internal |
| ✅REQ-006 | ❌ | Unique ID enforcement must prevent duplicates at save time... | High | Contractual |
| ✅REQ-007 | ✅IT-INT-014 API Spec... | Register shall expose OData endpoint for downstream PGF tests... | Low | Internal |
| ✅REQ-008 | ✅Stakeholder Memo 05... | Requirement text must be atomic (single requirement per row)... | Medium | Stakeholder |
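A minimal Python sketch of the row-level SCM1.C2 check, assuming each register row is a dict with requirementID and sourceID keys. "Valid" is simplified here to non-blank; any ID-format rule PGF may apply is not shown. The sample reuses the IDs from the register excerpt with shortened source references, and REQ-006 has no source.

```python
from collections import Counter


def scm1_c2_passes(records: list[dict]) -> list[bool]:
    """True per record if requirementID is non-blank and occurs exactly once,
    and sourceID is non-blank."""
    ids = [str(r.get("requirementID") or "").strip() for r in records]
    counts = Counter(ids)  # duplicates fail on every row that shares the ID
    passes = []
    for rid, record in zip(ids, records):
        source = str(record.get("sourceID") or "").strip()
        passes.append(bool(rid) and counts[rid] == 1 and bool(source))
    return passes


def scm1_c2_coverage(records: list[dict]) -> float:
    """Percent of records that pass; 0.0 for an empty register."""
    passes = scm1_c2_passes(records)
    return 100.0 * sum(passes) / len(passes) if passes else 0.0


# Rows from the register excerpt; source references shortened, REQ-006 has none.
sample = [
    {"requirementID": "REQ-001", "sourceID": "MIL-STD-881"},
    {"requirementID": "REQ-002", "sourceID": "SOW Section C 2.3.1.4"},
    {"requirementID": "REQ-003", "sourceID": "BRD v2 §7"},
    {"requirementID": "REQ-004", "sourceID": "Contract Mod 03 §4.2"},
    {"requirementID": "REQ-005", "sourceID": "QMS-PROC-12"},
    {"requirementID": "REQ-006", "sourceID": None},
    {"requirementID": "REQ-007", "sourceID": "IT-INT-014"},
    {"requirementID": "REQ-008", "sourceID": "Stakeholder Memo 05"},
]
flagged = [r["requirementID"] for r, ok in zip(sample, scm1_c2_passes(sample)) if not ok]
print(flagged, scm1_c2_coverage(sample))  # prints ['REQ-006'] 87.5
```

Because this is only a portion of the register, the 87.5% here is not the project's coverage; it just shows how a missing source flags a row.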

How Project Data Flows Into PGF

PGF is source-agnostic: requirements, for example, can come from DOORS, SharePoint, Excel, or any other system. Before tests can run, the data must be transformed into the PGF schema. Ad-hoc projects do this in Excel/Power Query; with Djobu, the source structure (tms_Requirement) and the PGF mapping are built in, so PGF-T02 · requirementsRegister is kept current automatically.

- Sources: DOORS, SharePoint, Excel / CSV, Custom DB
- Transformation: field mapping, data cleanup, validation
- Djobu: enforced fields, consistent schema, single source of truth
- Result: automated, error-free, no rework

```json
{
  "PGF-T02: requirementsRegister": [
    {
      "requirementID": "REQ-001",
      "scopeEntityID": "PRJ-001",
      "requirementText": "System shal...",
      "type": "Contractual",
      "priority": "High",
      "owner": "Systems Eng"
    },
    {
      "requirementID": "REQ-002",
      "scopeEntityID": "PRJ-001",
      "requirementText": "Interface must...",
      "type": "Stakeholder",
      "priority": "Medium",
      "owner": "PMO"
    },
    ...
  ]
}
```
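For an ad-hoc project without built-in mapping, the field mapping, cleanup, and validation steps that produce records like this excerpt could be sketched in Python as below. The source column names in FIELD_MAP and the REQUIRED tuple are hypothetical; real names depend on the export from DOORS, SharePoint, Excel, or another system. sourceID is mapped because the SCM1.C2 test reads it.

```python
import json

# Hypothetical source column names (left) mapped to PGF requirementsRegister
# fields (right). Real column names depend on the source system's export.
FIELD_MAP = {
    "ReqNumber": "requirementID",
    "Project": "scopeEntityID",
    "Text": "requirementText",
    "Category": "type",
    "Priority": "priority",
    "Owner": "owner",
    "SourceRef": "sourceID",
}
REQUIRED = ("requirementID", "scopeEntityID", "requirementText")  # assumed


def to_pgf(row: dict) -> dict:
    """Field mapping plus cleanup: rename, trim strings, turn blanks into None."""
    record = {}
    for src, dst in FIELD_MAP.items():
        value = row.get(src)
        if isinstance(value, str):
            value = value.strip() or None
        record[dst] = value
    return record


def build_register(rows: list[dict]) -> tuple[dict, list[str]]:
    """Map all rows; collect validation messages instead of dropping rows,
    so incomplete rows still count against the PGF tests."""
    register, problems = [], []
    for i, row in enumerate(rows):
        record = to_pgf(row)
        missing = [f for f in REQUIRED if not record[f]]
        if missing:
            problems.append(f"row {i}: missing {', '.join(missing)}")
        register.append(record)
    return {"PGF-T02: requirementsRegister": register}, problems


rows = [
    {"ReqNumber": " REQ-001 ", "Project": "PRJ-001", "Text": "System shall capture all customer requirements",
     "Category": "Contractual", "Priority": "High", "Owner": "Systems Eng", "SourceRef": "MIL-STD-881"},
    {"ReqNumber": "", "Project": "PRJ-001", "Text": "Hypothetical row with no ID",
     "Category": "Internal", "Priority": "Low", "Owner": "PMO", "SourceRef": ""},
]
register, problems = build_register(rows)
print(json.dumps(register, indent=2))
print(problems)  # prints ['row 1: missing requirementID']
```

Keeping incomplete rows in the register, rather than silently dropping them, means data gaps show up as lower test coverage instead of disappearing from the score.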

Monitoring Process Maturity Over Time

The PGF score summary shows maturity results by process and attribute across reporting cycles. These results give PMO leaders a direct view of where governance processes are strong and where they need attention.

Without automation, keeping this view current quickly becomes unmanageable—every cycle would mean repeating non-standard tests, manual data pulls, and ad-hoc transformations. With Djobu, the tests are built into daily work, so scores are updated continuously and monitoring becomes effortless.
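To make the cycle-over-cycle view concrete, here is a small Python sketch that compares two reporting cycles and lists criteria needing attention. It assumes a hypothetical layout of one dict per cycle mapping criterion ID to score; the earlier-cycle values are invented for illustration, and the current values are the SCM1 scores from the criteria table.

```python
# Hypothetical score summary layout: criterion ID -> score (out of 5) per cycle.
def score_changes(previous: dict[str, int], current: dict[str, int]) -> dict[str, int]:
    """Change in score for each criterion present in both cycles."""
    return {c: current[c] - previous[c] for c in current if c in previous}


def needs_attention(previous: dict[str, int], current: dict[str, int], target: int = 5) -> list[str]:
    """Criteria below target in the current cycle, or whose score dropped."""
    delta = score_changes(previous, current)
    return sorted(c for c, s in current.items() if s < target or delta.get(c, 0) < 0)


previous = {"SCM1.C1": 5, "SCM1.C2": 3, "SCM1.C3": 4}  # hypothetical earlier cycle
current = {"SCM1.C1": 5, "SCM1.C2": 4, "SCM1.C3": 4}   # SCM1 scores from the criteria table
print(score_changes(previous, current))    # prints {'SCM1.C1': 0, 'SCM1.C2': 1, 'SCM1.C3': 0}
print(needs_attention(previous, current))  # prints ['SCM1.C2', 'SCM1.C3']
```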